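A running list of books I've read, books sitting in my to-read pile, and books I plan to buy, kept here so I don't forget.

Basics

↓ Scheduled for release on 2024/8/26

Design

↓ Scheduled for release on 2024/8/28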

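So, having finished the book, here are my takeaways, impressions, and other notes 📝

Author's blog: blux.hatenablog.com

Sample code repository: github.com

An article excerpting parts of "Chapter 3 全体像の説明" and "Chapter 4 アプリケーションをKubernetesクラスタ上につくる": codezine.jp

Note: this lists only the items I noticed that were not in the errata as of 2024/06/05: www.shoeisha.co.jp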

| As printed | Correction |
|---|---|
| Hello, world! Let's learn Kubernetes! | Hello, world! |
| -stdin(-i) | --stdin(-i) |
| ユーザー名などを変更する可能性があるすべての設定情報に使えます。 | ユーザー名など変更する可能性があるすべての設定情報に使えます。 |
| 今回は簡単のためにDeploymentの | 今回は簡単にするためDeploymentの |

I read "つくって、壊して、直して学ぶ Kubernetes入門" 🐳

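With plenty of hands-on exercises and lots of small discoveries along the way, this struck me as a very good first book for anyone about to start with Kubernetes!

Members also receive a bonus PDF, "ArgoCDでGitOps体験してみよう", so be sure to grab that too!

Setting up a Prometheus Stack on my home Raspberry Pi k8s cluster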

    $ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

    $ helm repo update
    Hang tight while we grab the latest from your chart repositories...
    ...Successfully got an update from the "prometheus-community" chart repository
    Update Complete. ⎈Happy Helming!⎈

    $ k create namespace monitoring
    namespace/monitoring created

    $ helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack
    NAME: kube-prometheus-stack
    LAST DEPLOYED: Mon Jun 3 21:26:28 2024
    NAMESPACE: monitoring
    STATUS: deployed
    REVISION: 1
    NOTES:
    kube-prometheus-stack has been installed. Check its status by running:
      kubectl --namespace monitoring get pods -l "release=kube-prometheus-stack"
    Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

    $ k get po
    NAME                                                        READY   STATUS    RESTARTS   AGE
    alertmanager-kube-prometheus-stack-alertmanager-0           2/2     Running   0          49m
    kube-prometheus-stack-grafana-67997d8bd4-wnh9f              3/3     Running   0          49m
    kube-prometheus-stack-kube-state-metrics-657ddb7877-jcj26   1/1     Running   0          49m
    kube-prometheus-stack-operator-5975686646-bhk6m             1/1     Running   0          49m
    kube-prometheus-stack-prometheus-node-exporter-jc6qx        1/1     Running   0          49m
    kube-prometheus-stack-prometheus-node-exporter-n5hwh        1/1     Running   0          49m
    kube-prometheus-stack-prometheus-node-exporter-x6kz6        1/1     Running   0          49m
    prometheus-kube-prometheus-stack-prometheus-0               2/2     Running   0          49m
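The install steps above can also be wrapped into one repeatable command. This is a minimal sketch, assuming the release should live in the `monitoring` namespace reported in the install output; the function name `install_prometheus_stack` is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (name is illustrative): install or upgrade
# kube-prometheus-stack into a namespace.
# Assumes helm is installed and the current context points at the target cluster.
install_prometheus_stack() {
  local namespace="${1:-monitoring}"
  helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
  helm repo update
  # `upgrade --install` can be re-run safely; --create-namespace replaces
  # the separate `kubectl create namespace` step.
  helm upgrade --install kube-prometheus-stack \
    prometheus-community/kube-prometheus-stack \
    --namespace "$namespace" --create-namespace
}
```

Calling `install_prometheus_stack` with no argument targets `monitoring`; passing another name installs the release there instead.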

Port-forward using the automatically generated Service.

    $ k port-forward service/kube-prometheus-stack-grafana 8080:80
    Forwarding from 127.0.0.1:8080 -> 3000
    Forwarding from [::1]:8080 -> 3000
    Handling connection for 8080
    Handling connection for 8080

Access http://localhost:8080/login and confirm that the login screen appears.

The default login credentials are as follows, so try logging in.

| username | password |
|---|---|
| admin | prom-operator |
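If the default has been overridden, the password can be read back from the cluster rather than guessed. A minimal sketch, assuming the release name `kube-prometheus-stack` and the `monitoring` namespace used above, and assuming the bundled Grafana chart stores its credentials in a Secret named `<release>-grafana`; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Grafana admin password kept in the chart's Secret.
# Assumes release "kube-prometheus-stack"; the namespace defaults to "monitoring".
grafana_admin_password() {
  local namespace="${1:-monitoring}"
  kubectl get secret kube-prometheus-stack-grafana \
    --namespace "$namespace" \
    -o jsonpath='{.data.admin-password}' | base64 --decode
}
```

Secret values are base64-encoded, which is why the output is piped through `base64 --decode`.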

I can't believe how easy that was... (I'm genuinely moved)

I decided to make HPA usable on my home Raspberry Pi k8s cluster and installed metrics-server, but it never became ready, so I troubleshot it.

Run the install command as described in the instructions.

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

READY stays at 0/1...

    k get deployment metrics-server -n kube-system
    NAME             READY   UP-TO-DATE   AVAILABLE   AGE
    metrics-server   0/1     1            0           10h

Only metrics-server fails to become READY.

    k get po -n kube-system
    NAME                                   READY   STATUS    RESTARTS   AGE
    coredns-7db6d8ff4d-67gfj               1/1     Running   0          8d
    coredns-7db6d8ff4d-xlznj               1/1     Running   0          8d
    etcd-k8s-master01                      1/1     Running   0          8d
    kube-apiserver-k8s-master01            1/1     Running   0          8d
    kube-controller-manager-k8s-master01   1/1     Running   0          8d
    kube-proxy-dww28                       1/1     Running   0          8d
    kube-proxy-lb9h2                       1/1     Running   0          8d
    kube-proxy-t9lsn                       1/1     Running   0          8d
    kube-scheduler-k8s-master01            1/1     Running   0          8d
    metrics-server-7ffbc6d68-pqtdt         0/1     Running   0          10h

kubelet Readiness probe failed: HTTP probe failed with statuscode: 500

It looks like the readiness probe is failing.

    k describe po metrics-server-7ffbc6d68-pqtdt -n kube-system
    Name:                 metrics-server-7ffbc6d68-pqtdt
    Namespace:            kube-system
    Priority:             2000000000
    Priority Class Name:  system-cluster-critical
    Service Account:      metrics-server
    Node:                 k8s-worker01/192.168.1.111
    Start Time:           Wed, 29 May 2024 23:51:51 +0900
    Labels:               k8s-app=metrics-server
                          pod-template-hash=7ffbc6d68
    Annotations:          <none>
    Status:               Running
    IP:                   10.244.1.73
    IPs:
      IP:           10.244.1.73
    Controlled By:  ReplicaSet/metrics-server-7ffbc6d68
    Containers:
      metrics-server:
        Container ID:  containerd://603b5f91968f0afb410fa3a49786c563bd26f87de59c11ce1d4822e743a7fa29
        Image:         registry.k8s.io/metrics-server/metrics-server:v0.7.1
        Image ID:      registry.k8s.io/metrics-server/metrics-server@sha256:db3800085a0957083930c3932b17580eec652cfb6156a05c0f79c7543e80d17a
        Port:          10250/TCP
        Host Port:     0/TCP
        Args:
          --cert-dir=/tmp
          --secure-port=10250
          --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
          --kubelet-use-node-status-port
          --metric-resolution=15s
        State:          Running
          Started:      Wed, 29 May 2024 23:52:00 +0900
        Ready:          False
        Restart Count:  0
        Requests:
          cpu:        100m
          memory:     200Mi
        Liveness:     http-get https://:https/livez delay=0s timeout=1s period=10s #success=1 #failure=3
        Readiness:    http-get https://:https/readyz delay=20s timeout=1s period=10s #success=1 #failure=3
        Environment:  <none>
        Mounts:
          /tmp from tmp-dir (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-6szcf (ro)
    Conditions:
      Type                        Status
      PodReadyToStartContainers   True
      Initialized                 True
      Ready                       False
      ContainersReady             False
      PodScheduled                True
    Volumes:
      tmp-dir:
        Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
        Medium:
        SizeLimit:  <unset>
      kube-api-access-6szcf:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   Burstable
    Node-Selectors:              kubernetes.io/os=linux
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason     Age                     From     Message
      ----     ------     ----                    ----     -------
      Warning  Unhealthy  4m41s (x4114 over 10h)  kubelet  Readiness probe failed: HTTP probe failed with statuscode: 500

    k logs metrics-server-7ffbc6d68-6srfk -n kube-system
    I0530 03:08:07.651554 1 serving.go:374] Generated self-signed cert (/tmp/apiserver.crt, /tmp/apiserver.key)
    I0530 03:08:08.554701 1 handler.go:275] Adding GroupVersion metrics.k8s.io v1beta1 to ResourceManager
    I0530 03:08:08.686371 1 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController
    I0530 03:08:08.686459 1 shared_informer.go:311] Waiting for caches to sync for RequestHeaderAuthRequestController
    I0530 03:08:08.686466 1 configmap_cafile_content.go:202] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::client-ca-file"
    I0530 03:08:08.686518 1 shared_informer.go:311] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file
    I0530 03:08:08.686517 1 configmap_cafile_content.go:202] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file"
    I0530 03:08:08.687032 1 shared_informer.go:311] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file
    I0530 03:08:08.689211 1 dynamic_serving_content.go:132] "Starting controller" name="serving-cert::/tmp/apiserver.crt::/tmp/apiserver.key"
    E0530 03:08:08.689974 1 scraper.go:149] "Failed to scrape node" err="Get \"https://192.168.1.101:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 192.168.1.101 because it doesn't contain any IP SANs" node="k8s-master01"
    I0530 03:08:08.690832 1 secure_serving.go:213] Serving securely on [::]:10250
    I0530 03:08:08.691791 1 tlsconfig.go:240] "Starting DynamicServingCertificateController"
    E0530 03:08:08.692009 1 scraper.go:149] "Failed to scrape node" err="Get \"https://192.168.1.112:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 192.168.1.112 because it doesn't contain any IP SANs" node="k8s-worker02"
    E0530 03:08:08.701807 1 scraper.go:149] "Failed to scrape node" err="Get \"https://192.168.1.111:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 192.168.1.111 because it doesn't contain any IP SANs" node="k8s-worker01"
    I0530 03:08:08.786953 1 shared_informer.go:318] Caches are synced for RequestHeaderAuthRequestController
    I0530 03:08:08.787115 1 shared_informer.go:318] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file
    I0530 03:08:08.788043 1 shared_informer.go:318] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file
    E0530 03:08:23.685957 1 scraper.go:149] "Failed to scrape node" err="Get \"https://192.168.1.112:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 192.168.1.112 because it doesn't contain any IP SANs" node="k8s-worker02"
    E0530 03:08:23.692988 1 scraper.go:149] "Failed to scrape node" err="Get \"https://192.168.1.111:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 192.168.1.111 because it doesn't contain any IP SANs" node="k8s-worker01"
    E0530 03:08:23.695203 1 scraper.go:149] "Failed to scrape node" err="Get \"https://192.168.1.101:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 192.168.1.101 because it doesn't contain any IP SANs" node="k8s-master01"
    I0530 03:08:36.493054 1 server.go:191] "Failed probe" probe="metric-storage-ready" err="no metrics to serve"
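With this much log output it helps to boil the errors down to "which nodes fail, and how often". A minimal sketch, assuming the log format shown above; `failed_scrape_nodes` is a hypothetical helper name and reads log text from standard input.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarise "Failed to scrape node" errors per node.
# Reads metrics-server log lines on stdin and prints "<node> <count>".
failed_scrape_nodes() {
  grep 'Failed to scrape node' \
    | sed -n 's/.*node="\([^"]*\)".*/\1/p' \
    | sort | uniq -c | awk '{ print $2, $1 }'
}
```

It could be fed with something like `kubectl logs deploy/metrics-server -n kube-system | failed_scrape_nodes`. If every node shows up, as in the log above, the cause is more likely cluster-wide (here, the kubelet certificates) than a single broken node.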

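The logs suggest that metrics-server never becomes ready because the kubelet certificates do not contain IP SANs (Subject Alternative Names), so TLS verification fails every time it scrapes a node. I will therefore disable TLS verification.

In other words, the kubelet certificates on my home Raspberry Pi k8s cluster are currently not signed by the cluster certificate authority, so I pass --kubelet-insecure-tls in args to disable certificate verification.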

    k edit deploy metrics-server -n kube-system

    template:
      metadata:
        creationTimestamp: null
        labels:
          k8s-app: metrics-server
      spec:
        containers:
        - args:
          - --cert-dir=/tmp
          - --secure-port=10250
          - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
          - --kubelet-use-node-status-port
          - --metric-resolution=15s
          - --kubelet-insecure-tls # ← add this line

    k get po -n kube-system
    NAME                                   READY   STATUS    RESTARTS   AGE
    coredns-7db6d8ff4d-67gfj               1/1     Running   0          12d
    coredns-7db6d8ff4d-xlznj               1/1     Running   0          12d
    etcd-k8s-master01                      1/1     Running   0          12d
    kube-apiserver-k8s-master01            1/1     Running   0          12d
    kube-controller-manager-k8s-master01   1/1     Running   0          12d
    kube-proxy-dww28                       1/1     Running   0          12d
    kube-proxy-lb9h2                       1/1     Running   0          12d
    kube-proxy-t9lsn                       1/1     Running   0          12d
    kube-scheduler-k8s-master01            1/1     Running   0          12d
    metrics-server-d994c478f-8f7hh         1/1     Running   0          23m

    k get deploy -n kube-system
    NAME             READY   UP-TO-DATE   AVAILABLE   AGE
    coredns          2/2     2            2           12d
    metrics-server   1/1     1            1           3d10h

And with that, metrics-server started up successfully!
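The same change can be applied without opening an editor, which is handy when rebuilding the cluster. A minimal sketch using a JSON patch, assuming metrics-server is the first container in the Deployment (as in the manifest above); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append --kubelet-insecure-tls to the metrics-server args
# with a JSON patch instead of an interactive `kubectl edit`.
# Assumes the metrics-server container is at index 0 in the Pod template.
allow_insecure_kubelet_tls() {
  kubectl patch deployment metrics-server --namespace kube-system \
    --type=json \
    --patch '[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--kubelet-insecure-tls"}]'
  # Block until the replacement Pod has rolled out.
  kubectl rollout status deployment/metrics-server --namespace kube-system
}
```

Note that this flag skips verification of the kubelet serving certificates, so it fits a home lab better than a production cluster.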

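International guidance on container security

It comprehensively covers the security considerations for containers: csrc.nist.gov

A vulnerability scanning tool provided by Aqua Security Software

It scans container OS packages, language-specific dependencies, and more: github.com

A lint tool for Dockerfiles

It builds in checks from Docker best practices and from the ShellCheck static analysis tool.

It checks a built image against Docker best practices and the items required by the CIS Docker Benchmarks.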
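These three checks could be chained into one CI step. The sketch below assumes the tools described above are Trivy, Hadolint, and Dockle; those names are inferred from the descriptions rather than stated in the text, and `scan_image` is a hypothetical function name.

```shell
#!/usr/bin/env bash
# Hypothetical CI step: lint the Dockerfile, then scan the built image.
# Tool names (hadolint, trivy, dockle) are assumptions inferred from the notes.
scan_image() {
  local dockerfile="$1" image="$2"
  # Lint the Dockerfile itself.
  hadolint "$dockerfile" || return 1
  # Fail only on HIGH and CRITICAL vulnerabilities.
  trivy image --exit-code 1 --severity HIGH,CRITICAL "$image" || return 1
  # Return non-zero when dockle raises alerts on the built image.
  dockle --exit-code 1 "$image" || return 1
}
```

A call such as `scan_image Dockerfile myapp:latest` (image name illustrative) stops at the first failing tool, so the cheapest check runs first.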

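Kritis Signer is an open source command-line tool that can create Binary Authorization attestations based on a policy you configure.

OSS developed mainly by Google

It can build container images without depending on a specific container runtime.

When you build with kaniko, it takes a snapshot after each command in the Dockerfile, saves each one as a layer, assembles the image, and pushes it to a registry.

The image information created for each layer can be reused as a cache in the next build, so build time is shorter when the layers have not changed.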
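A cached kaniko build might be invoked roughly as follows. This is a sketch only: it runs the executor image through `docker run` for local experimentation (in a cluster it would normally run as a Pod), registry credentials are left out, and `kaniko_build` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build and push an image with the kaniko executor image,
# with layer caching switched on.
# Assumption: credentials for the destination registry are provided separately
# (kaniko reads them from /kaniko/.docker/config.json inside the container).
kaniko_build() {
  local context_dir="$1" destination="$2"
  docker run --rm \
    -v "$context_dir":/workspace \
    gcr.io/kaniko-project/executor:latest \
    --context dir:///workspace \
    --dockerfile /workspace/Dockerfile \
    --destination "$destination" \
    --cache=true
}
```

With `--cache=true`, layers from an earlier build are looked up in a cache repository and reused when the corresponding Dockerfile command has not changed.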

At present there is no tool that checks whether a base image is an officially distributed one, so you can cover this by adding the following to CI.

docker search --filter is-official=true <IMAGE_NAME>

There is a related Issue in Hadolint, so Hadolint may support this someday.
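Wired into CI, the `docker search` check could look like the sketch below. Both function names are hypothetical, and the Dockerfile parsing is deliberately simple: it assumes plain Docker Hub names (no registry host with a port) and does not skip `FROM` lines that reference an earlier build stage.

```shell
#!/usr/bin/env bash
# Hypothetical CI check: succeed only if the exact image name appears in
# Docker Hub search results restricted to official images.
is_official_image() {
  local name="$1"
  docker search --filter is-official=true --format '{{.Name}}' "$name" \
    | grep -Fxq "$name"
}

# Print the base image names (tag and digest stripped) found in a Dockerfile.
base_images() {
  awk 'toupper($1) == "FROM" {
         img = ($2 ~ /^--/) ? $3 : $2   # skip an option such as --platform=...
         sub(/[:@].*/, "", img)         # drop ":tag" or "@digest"
         print img
       }' "$1"
}
```

A CI job could loop over `base_images Dockerfile` and fail the build as soon as `is_official_image` returns non-zero for any name.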

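I will grow this entry into a collection of tips: notes on how I dealt with issues I ran into while operating Kubernetes.

Occasionally a Pod does not go away even after kubectl delete pods. In that case you can force the deletion by adding the --grace-period=0 and --force options.

kubectl delete pods "${pod_name}" --grace-period=0 --force

This method deletes the Pod without waiting for confirmation from the kubelet, so the Pod's processes may not be fully gone, and conflicts with other resources can occur. If you find yourself doing this routinely, you need to investigate the root cause.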

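| Option | Description |
|---|---|
| --grace-period=0 | Period of time in seconds given to the resource to terminate gracefully. Ignored if negative. Set to 1 for immediate shutdown. Can only be set to 0 when --force is true (force deletion). |
| --force | If true, immediately remove resources from the API and bypass graceful deletion. Note that immediate deletion of some resources may result in inconsistency or data loss and requires confirmation. |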
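Before forcing anything, it can be useful to list which Pods are actually stuck. A minimal sketch, assuming the default column layout of `kubectl get pods --all-namespaces`; `terminating_pods` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "namespace/name" for every Pod whose STATUS column
# reads Terminating, so stuck Pods can be inspected before a forced delete.
# Columns with --all-namespaces: NAMESPACE NAME READY STATUS RESTARTS AGE
terminating_pods() {
  kubectl get pods --all-namespaces --no-headers \
    | awk '$4 == "Terminating" { print $1 "/" $2 }'
}
```

For each Pod it reports, checking `kubectl get pod <name> -n <namespace> -o jsonpath='{.metadata.finalizers}'` and the state of its node is a reasonable first step toward the root cause.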

If kubectl cannot connect and fails with an error like the following, a certificate mismatch is the likely cause.

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

Check the kubeconfig and confirm that the information held on the machine running kubectl does not differ from the cluster's.
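One way to make that comparison concrete is to digest the CA data embedded in two kubeconfig files and compare the results. This is a sketch under assumptions: both files embed `certificate-authority-data` inline, both have a current context set (required by `--minify`), and `sha256sum` is available; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a SHA-256 digest of the cluster CA data embedded in
# a kubeconfig (for the cluster of its current context).
kubeconfig_ca_digest() {
  kubectl config view --kubeconfig "$1" --raw --minify \
    -o jsonpath='{.clusters[0].cluster.certificate-authority-data}' \
    | sha256sum | cut -d ' ' -f 1
}

# Succeeds only when both kubeconfigs embed the same CA data.
same_cluster_ca() {
  [ "$(kubeconfig_ca_digest "$1")" = "$(kubeconfig_ca_digest "$2")" ]
}
```

For example, `same_cluster_ca ~/.kube/config ./admin.conf` would compare the local file with a fresh copy fetched from the control plane (the second path is illustrative). A mismatch usually means the local kubeconfig predates a cluster rebuild or certificate regeneration.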

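Finally finished reading it 📕

I learned a lot and found much to agree with, and it was a quick read. The part about the culture of criticism really hit home.

I just finished reading 『世界一流エンジニアの思考法 (文春e-book)』 (by 牛尾 剛) https://t.co/fClvMjiLL1 pic.twitter.com/PQS45gr9Hy

(𝕋𝕠𝕔𝕪𝕦𝕜𝕚 𝕏 (@Tocyuki), April 6, 2024)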

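So, having finished 世界一流エンジニアの思考法, here is a belated review of sorts, written as a memo 📕

The book was written by 牛尾剛 (Tsuyoshi Ushio), a senior engineer at Microsoft in the US and a developer of Azure Functions.

The writing project reportedly grew out of his note articles and blog. I have been dipping into the note articles and they are very interesting, so take a look if you are curious!

Here are the things I learned while reading, in no particular order.

I read "世界一流エンジニアの思考法"!

Overall I learned a lot and agreed with much of it. "第3章 脳に余裕を生む情報整理・記憶術" in particular overlaps with "プログラマ脳", which I finished last year, so reading the two together would probably deepen understanding.

The book has been selling very well and Ushio-san's media appearances have surged. Among them, I personally found his conversation with けんすう-san especially interesting.

At 248 pages it is also a quick read, so I highly recommend it!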

It was all written here... zenn.dev

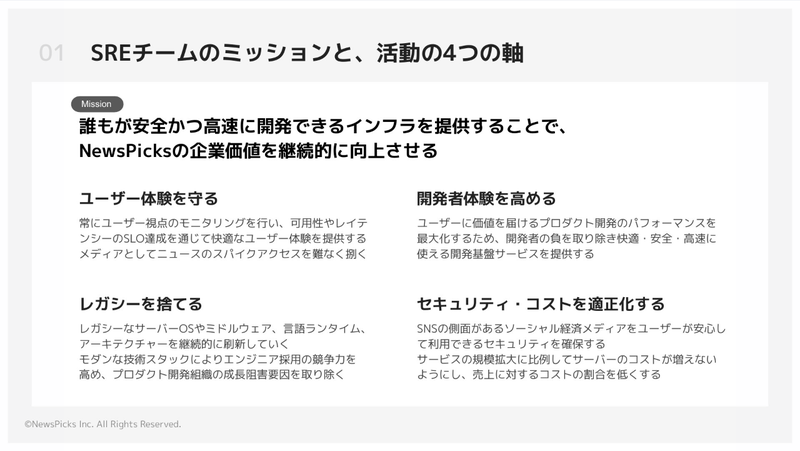

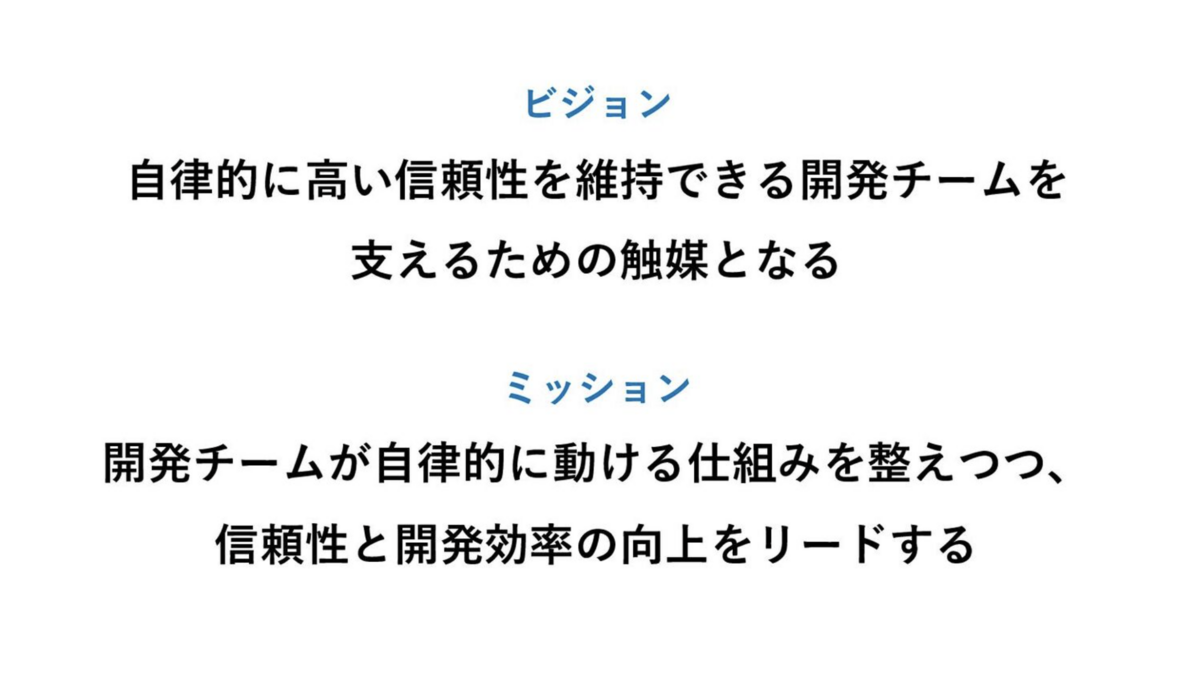

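The SRE team at my current job has started working out its MVV (Mission, Vision, Values), so I am looking into other companies' MVVs.

To deliver dely's passion and happiness to as many people as possible, we maximize product value through system operations. Along the way we think about what the best choice is and keep improving system design and operations.

Remove the factors that hinder business growth while making it possible to deliver a lot of value to users quickly.

Through SRE adoption, improve Developer Experience, keep up with business expansion, and provide services that customers can trust.

No explicit mission is stated, but it is a useful reference, so I am posting it here.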

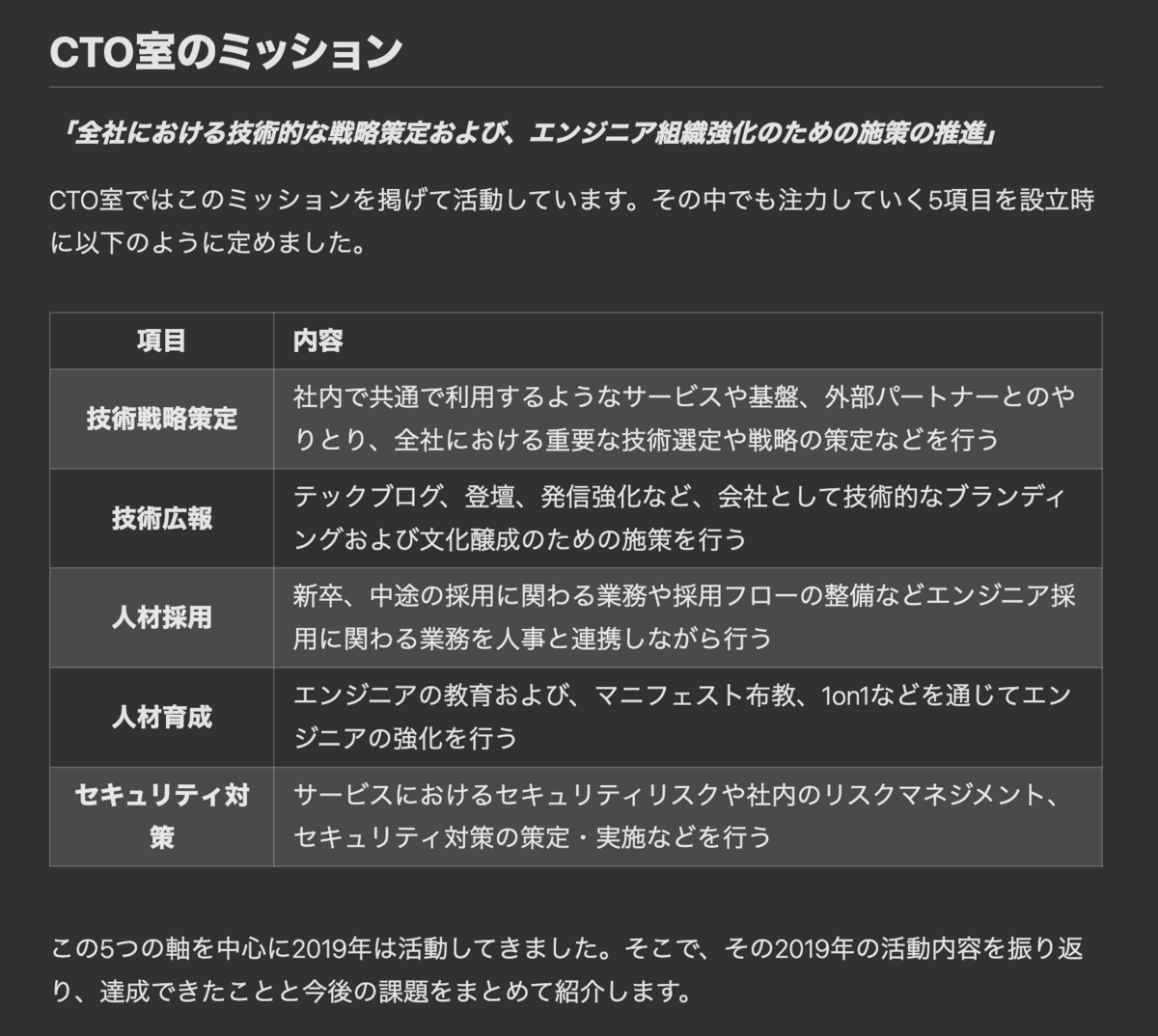

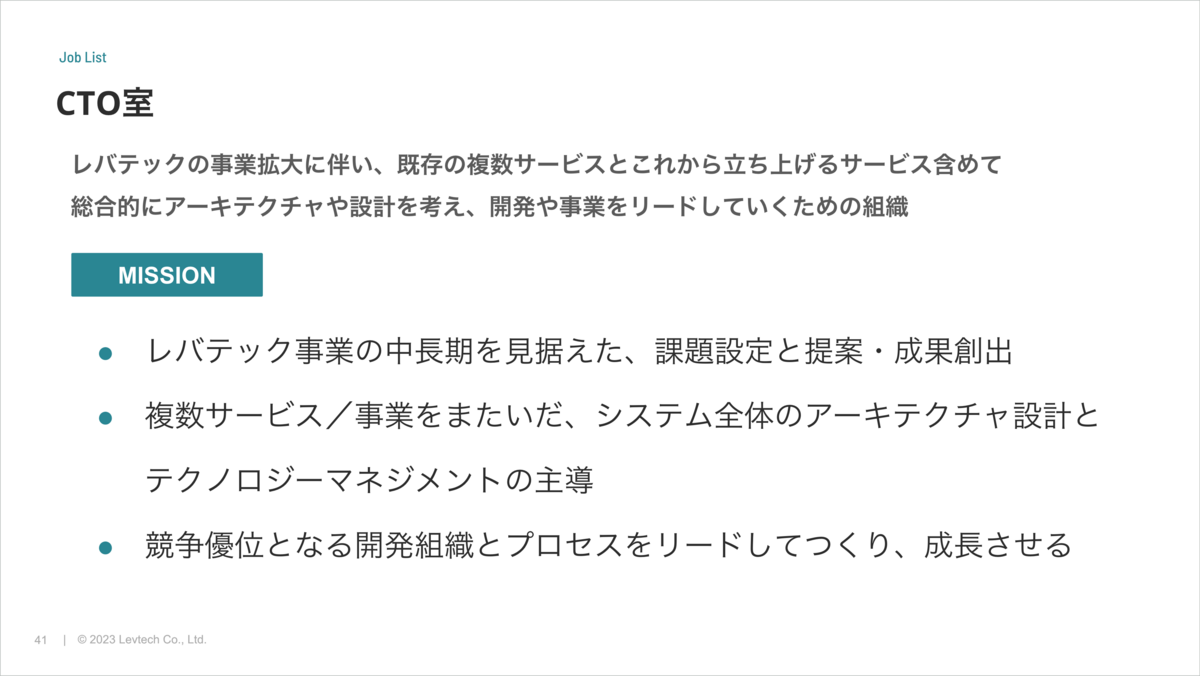

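My current company also has a CTO office, and I got curious about the background, purpose, and role behind other companies' CTO offices, so I looked into it. I picked a few results from a rough internet search and looked for common threads in their founding background, purpose, and mission.

The CTO office was established so that the work the VPoE handled in fiscal 2018 could be handled by a team.

Details unknown

The CTO office was created in May 2019. Its predecessor was a sub-organization of the development division, formed within the business division that developed and operated モンスト by gathering engineers involved in multiple services. Today it provides developer support across business divisions and joins individual services and projects within them to support them directly.

The CTO office has four main missions. The first, "research and development on the design, development, and maintenance of service technology," involves tracking the latest technology trends and investigating and verifying them, thereby leading improvements in service value. The second, "developing people who build things," focuses on growing in-house engineers but also includes educational support for upper secondary specialized training schools in Shibuya Ward and elsewhere. The third, "QA improvement activities," aims not only to raise service quality but also to give each business division continuous improvement processes and procedures and to support their practice. The last, "support for data KPI design and analysis," spreads ways of thinking about and approaching data use across the company. Through KPI design and data analysis, it evaluates service performance and supports decision-making by business divisions and management.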

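Because we are an operating company, releasing individual features and making sudden performance improvements inevitably take priority. We were being called in to help various teams each time that happened. Frankly, in that state the cross-cutting improvement work made no progress at all. So I think the intent of our CTO, 松下-san, in launching the CTO office as an organization separate from the existing product division was to carve out a team for company-wide improvement work.

The CTO office's role is to find problems ourselves and fix them. There are many problems, so we can only start with what we can do. What we keep in mind is raising the development efficiency of "カオナビ" so that value can be delivered to customers more smoothly. Put differently, as long as that does not waver, any method is fine.

Unknown

The CTO office works on so-called common platforms such as infrastructure and security, and on technical areas that fall outside the business divisions' missions but that the company needs.

Launched when the CTO took office?

Simply put, it is the department that makes the CTO's vision a reality. After the CTO took office, we announced DMM Tech Vision. Our job is to realize it. To that end, we currently work in three teams. First is the evangelist team, which handles the design of internal programs and branding. Made up of technical specialists, it works with HR to support engineers' skill development, handles tech PR, and also carries out external activities. The other two are the business support team and the technical support team. As the name says, the business support team supports business divisions from the technical side. It provides hands-on help such as writing code, but its mission is to contribute to business growth, and it does everything from organizational development to system development. The technical support team provides company-wide technical support, such as developing shared tools for in-house engineers and promoting the move of self-operated systems to the cloud. Basically, these three teams work together to drive DMM.com's transformation into a tech company.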

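To lead the resolution of system and data challenges at レバテック.

Incidentally, one background factor in setting up the CTO office was that ベルフェイス's development environment was, to put it mildly, not in good shape. By working on the three points above and building an environment in which the CTO and the developers can do what they want to do, we are moving product development forward.

Put simply, it is an organization working on the following three things: 1. stable system operation, 2. a healthy development process, 3. eliminating overburden and waste. Overall, it focuses on establishing a lean, agile development setup and improving QCDF.

Seeing me take on too much work and struggle after becoming CTO, our president 佐藤 suggested gathering the people at hey with CTO experience into a team. Every company has some vehicle for solving problems that cut across the company. At hey the organization is split by product, but we are all aiming at the same thing. This team was created so that things only a cross-cutting group can do, and things individual teams cannot solve alone, can be solved at the CTO office's discretion.

Members who broadly understand where the business is heading and who share its future goals come together to solve a variety of things. Examples include organizational management questions such as how to improve the current shape of the organization, as well as hiring criteria, evaluation, assessment, and transfers. These are matters that arise in running an organization and that are administrative yet require difficult judgment. We also discuss work that is technically better done across teams, such as how to think about technologies like mobile apps and infrastructure.

Creating a team to split the database was what led to the CTO office.

It is an organization whose main mission is paying down technical debt that is company-wide or spans multiple services. Decisions made by each service team inevitably tend toward local optimization, so some problems have to be decided and tackled from a global-optimization standpoint. Since creating the CTO office I have made a point of securing in it the resources that should be assigned to global-optimization work. The number varies over time, but roughly 10-15% of engineers work in the CTO office. I do not have personnel authority over the engineers assigned to each service's development team, but CTO office resources act as a regulating valve: I can assign them to company-wide problems or have them support whichever service team most needs help at the time, which makes resource adjustments faster and more flexible.

I looked into the founding background and missions of various companies' CTO offices. Naturally each company has its own purpose, background, and mission, but broadly they seem to share the idea of "an organization for solving problems that should be solved across the whole company."

I want to put what I found here to use in running my own organization.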

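I let my guard down, skipped my 2022 retrospective entirely, and then the new year arrived before I had posted my 2023 retrospective. The last few years have been very dense for me personally, so I want to look back properly, set goals for the coming year, and follow through.

Looking at past retrospectives, I have forgotten a lot of what happened and achieved almost none of the goals I set, which makes me laugh at how sloppy I am. Still, I am living a fun and happy life day to day, I get along well with my wife, I can feel steady growth, and 2023 was a year of big leaps (?), so all good!!!

That said, I keenly feel that I am just a lucky person living earnestly but, in a sense, by the seat of my pants. I recognize that I am one of the have-nots and that I have to keep doing what I can (hang in there).

I will first list events under the headings of work and of speaking & community activities, dig into the most memorable ones, and then wrap up with an overall summary!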

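Right at the start of the year (1/3-1/9) I visited the United States for the first time, as a business trip billed as a CES inspection tour. During a layover of more than 10 hours in Honolulu, also a first for me, I spent New Year's cruising on a yacht, making and eating hamburgers on board at rest stops, and swimming in the sea. From there I went to LA and joined a Grand Canyon tour. After returning I finished my first casino visit with a $1,100 win followed by a $500 loss, attended CES, watched Cirque du Soleil's "O", and rode Level 4 driverless taxis from Waymo in Phoenix and GM Cruise in San Francisco. It was full of truly valuable experiences.

In 2023, touring the IT健保 restaurants and doing workations at its resort facilities also raised my QOL. "一新", famous as the IT健保 sushi restaurant, the Japanese restaurant "木都里亭", and トスラブ箱根和奏林, where we went for what we called a team offsite, were especially great. I urge fellow engineers enrolled in IT健保 to visit.

A good friend from my previous job has written an article about IT健保's wonderful restaurants and resort facilities and how to make the most of them, so please refer to that too.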

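The biggest event of 2023 was, without question, changing jobs.

As mentioned above, in August I joined イオンスマートテクノロジー through a referral from the CTO.

As an SRE, I spent five months running around to get the team into shape, wearing down my nerves in ways I cannot detail here. It was quite an ordeal, but I managed to finish sowing the seeds within the year, so I want to deliver solid results in 2024.

If you want the details, invite me out for drinks and I will happily talk your ear off, so I look forward to your invitations 🍺

Also, once inside, I judged that raising our presence from the outside would be a shortcut in many ways compared with pushing internally. I therefore took on launching a tech blog and a DevRel team and running an Advent Calendar, and through these activities we raised our presence as an engineering organization considerably. The DevRel team in particular now works very well as an amplifier, so this year I want to push hiring and organizational reform in earnest and make it a year of laying a firm foundation.

Related articles are below.

Some may wonder "what exactly is my role?", but put positively, this is a phase in which I can do anything that needs doing.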

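In 2023 I connected with and grew closer to many people through community activities. I had often heard people say that getting involved in a community changed their lives, and last year I truly felt my own life expanding through various communities. I think I can now say in my own words that "getting involved in communities changed my life."

Highlights include hosting (?) Cloudflare Meetup Nagano and connecting with people in Nagano's tech community, co-authoring and publishing a book with members of the NRUG SRE chapter, having drinks with fujiwara-san and songmu-san, whom I admire, at JAWS-UG コンテナ支部 #24 ecspresso MeetUp, and meeting all sorts of people at Cloud in the Camp 勝浦.

I also feel I attended more events than usual (beyond those I spoke at or hosted), both online and offline.

I will keep taking part in community activities and events to enrich my life, to learn, and to give back.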

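As in 2022, 2023 turned out to be a year full of events and change.

The job change brought the biggest change by far. On the work side, this year I went on my first overseas business trips, to the United States and China, published a book, and went to the legendary トスラブ箱根和奏林 for what we called a workation (it was seriously the best). In my private life I took family trips to Chiba and Shikoku, joined the volunteer fire brigade, started coaching soccer, and bought a car. It was a very good year, packed with experiences I will remember for life.

There were hard times after changing jobs, but by trying one thing after another I have reached the point where I can see some light, so this year I want to set a direction and produce major results.

Over the past few years my strategies and tactics have often worked out, and not only at work, but the details are sometimes weak and rough spots show here and there. I want to fix those and keep working toward a better life.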

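My personal resolution for this year is to carry out measures for turning each of the following into a habit. With persistence as the keyword for 2024, I want to build those habits (not that I am confident).

As a measure common to all of them, I want to keep "reviewing and planning every Sunday."

This will mostly apply to technical books, and I want to follow through on measures like the following.

This also relates to studying the focus areas described below: I will plan roughly how much and what to read over the year, and pair reading with output, such as writing a review when I finish a book. I will also get colleagues involved in reading groups, building a mechanism for learning from each other that doubles as team building.

I will use ブクログ to manage my reading.

Until now I have wanted to be able to do everything, and what I studied was quite scattered. From now on I will clearly narrow my focus to the following areas, raise my market value as an engineer whose strengths are Infra/DevOps/SRE/PlatformEngineering, and commit to the company and the business.

How I study basically comes down to input and output, and the main pillars are as follows.

I am already booked to speak at デブサミ this year and plan to hold events such as TiUG and AEON Tech Talk, so I want to keep taking on talks at those events and at major conferences such as SRE Next, PlatformEngineeringMeetup, and CNDT.

And this year I want to take on ISUCON too!!

It is nothing original, but I will study with the following apps every day!

For now, I will try to keep it in mind without overdoing it.

So that is my rough look back and my resolutions. Whether any of it means anything depends on what I do from here, but it was a good opportunity, so I will keep living each day earnestly while working at it in moderation!

My new car arrives in February and our fourth child is due in June (!?), so this year looks set to be packed with events too!!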

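People taught me a lot, so here are my notes.

A URL with Azure Kubernetes Service checked in the product filter

Kubernetes API deprecations, which lets you check for use of deprecated APIs, looks handy.

AKS releases weekly rounds of fixes and feature and component updates that affect all clusters and customers. However, these releases can take up to two weeks to roll out to all regions from the initial time of shipping due to Azure Safe Deployment Practices (SDP). It is important for customers to know when a particular AKS release is hitting their region, and the AKS release tracker provides these details in real time by versions and regions.

Looks worth reading regularly.

The ones translated into Japanese are recommended.

Made by 真壁-san of MS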

Now that AKS is set up, I will try deploying ArgoCD.

That said, I was basically able to deploy it just by following the official documentation (k8s is amazing).

    $ kubectl create namespace argocd
    $ kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

For a quick way to connect and check, set up port forwarding.

    $ kubectl port-forward svc/argocd-server -n argocd 8080:443
    Forwarding from 127.0.0.1:8080 -> 8080
    Forwarding from [::1]:8080 -> 8080

Connect to https://localhost:8080/ and confirm that the ArgoCD login screen is displayed.

Get the initial password with the argocd command.

    $ argocd admin initial-password -n argocd
    XXXXXXXXXXXXXXX ← the initial password is shown here
    This password must be only used for first time login. We strongly recommend you update the password using `argocd account update-password`.

I was able to log in by entering the admin user and the password obtained above.
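The same first login can be scripted from the CLI. A minimal sketch, assuming the port-forward above is still running on localhost:8080 and that the install manifests created the `argocd-initial-admin-secret` Secret in the `argocd` namespace; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the initial admin password straight from the Secret
# created by the Argo CD install manifests.
argocd_initial_password() {
  kubectl get secret argocd-initial-admin-secret --namespace argocd \
    -o jsonpath='{.data.password}' | base64 --decode
}

# Log in through the port-forward, then rotate the password as the CLI advises.
# --insecure is used because the forwarded endpoint serves a self-signed certificate.
argocd_first_login() {
  argocd login localhost:8080 --username admin \
    --password "$(argocd_initial_password)" --insecure
  argocd account update-password
}
```

`argocd account update-password` prompts interactively for the current and new passwords, so this is meant to be run by hand rather than in CI.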